Kubernetes as a project is maturing: support has been increased from nine to 12 months, and there’s a new protocol in place to ensure steady progress on feature development. Many of its new features are also meant to improve the quality of life of its users, like generic ephemeral inline volumes and structured logging.

Of the 34 enhancements in this version, 10 are completely new, eight are graduating to Stable, two are management changes on the Kubernetes project, and the other 14 are existing features that keep improving.

Here is the detailed list of what’s new in Kubernetes 1.19.

Kubernetes 1.19 – Editor’s pick:

These are the features that look most exciting to us in this release (ymmv):

#1498 Increase Kubernetes support window to one year

A major version upgrade requires a lot of planning; the more core the software is to your infrastructure, the more cautious you must be. What are the breaking changes? Are all of my existing tools ready for the update? Having three extra months to plan this upgrade will take a lot of pressure off cluster administrators and will help keep the cloud more secure.

#1693 Warning mechanism for use of deprecated APIs

Speaking of updates, one of the most painful parts of planning a major upgrade is finding alternatives for whatever is about to be deprecated. This little change will make developers and cluster administrators aware of those upcoming deprecations. Hopefully, this will allow them to plan ahead, removing some of that “upgrade stress.”

#1635 Require Transition from Beta

New features are always welcome, but the road to general availability is full of painful, breaking changes for early adopters. So, if features reach stability faster, users will be able to enjoy their benefits sooner, and early adopters won’t have to suffer so many changes.

That’s why we are happy to see this commitment in Kubernetes to push things forward and complete features before starting to play with new ones.

#1698 Generic ephemeral inline volumes

Yet another user-friendly update. Most containers just need a volume to store ephemeral data. This generic and simpler way of defining volumes will reduce the complexity of config files. This not only makes things easier for current Kubernetes users, but also softens the learning curve for newcomers.

#1739 kubeadm: customization with patches

Alternative configurations for each environment (prod, dev, test) simplify bootstrapping infrastructure, which is key to DevOps. Configuration patching further streamlines maintaining this process. And finally, choosing a standard tool across the whole project (raw patches, as kubectl uses, in this case) keeps things simple and keeps us a step further from going crazy. Thanks kubeadm team!

Kubernetes 1.19 core

#1498 Increase Kubernetes support window to one year

Stage: Major Change to Stable

Feature group: release

Support for Kubernetes will be increased from nine months (or three releases) to one year.

Currently, patch releases (e.g. 1.18.X) are published for up to nine months after the minor release (e.g. 1.18), forcing cluster operators to upgrade every nine months if they want to remain supported. However, nine months is an awkward period given the amount of planning required for such a critical update.

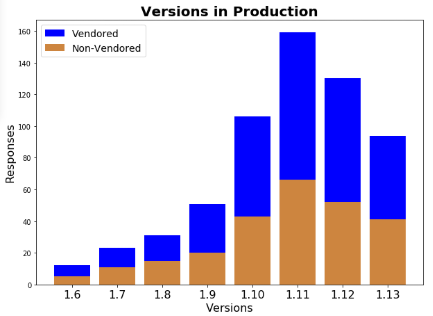

A survey conducted in early 2019 by the LTS working group showed that many Kubernetes end-users fail to upgrade within this nine-month support period. See the graph above; back then, only versions 1.11 to 1.13 were still supported, meaning that almost one-third of Kubernetes users were running an unsupported version in production.

Increasing the support window to 12 to 14 months means that around 80% of Kubernetes users will be running a supported version.

It’s important to note the big impact this change will have on the Kubernetes project, as having one more version to patch, test, and release will increase the pressure on all Kubernetes working groups.

#1635 Require Transition from Beta

Stage: Major Change to Stable

Feature group: architecture

We’ve previously talked about enhancements, like Ingress, that stay in Beta for too long. It looks like once an enhancement reaches Beta, where it’s enabled by default and people start to use it, the motivation to make it stable diminishes.

This enhancement defines a new policy on how Beta enhancements are treated, in hopes of increasing the number of features that graduate to Stable.

Once a feature graduates to Beta, it will be deprecated in nine months (three releases) unless:

- It meets GA criteria and graduates to Stable.

- Or, a new beta version is prepared, deprecating the previous one.

For example, the Beta APIs introduced in 1.19 will be deprecated no later than 1.22 (three releases later) and removed no later than 1.25.

To make users aware of these changes, new warnings will be added when using deprecated API calls. See #1693, the next point, for more information.

#1693 Warning mechanism for use of deprecated APIs

Stage: Beta

Feature group: api-machinery

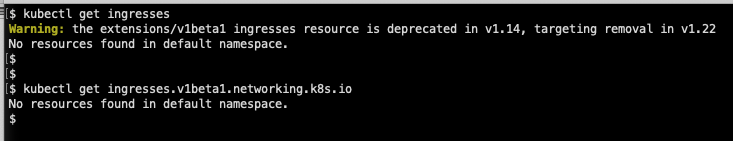

This enhancement is partly motivated by #1635 Require Transition from Beta, and partly to make it easier for users and cluster administrators to recognize and remedy the use of deprecated APIs.

From now on, the API server will include a warning header with deprecation information. This includes when an API was introduced, when it will be deprecated, and when it will be removed. It can also include extra remediation information, such as whether a new version is available and what the migration steps are.

Some clients, like kubectl, are already updated to display this information to the user.

#1623 Provide recommended .status.conditions schema

Stage: Graduating to Stable

Feature group: api-machinery

Many APIs provide a .status.conditions field that is useful for debugging. You can use this field, for example, to query a pod lifecycle:

    # kubectl -n cert-manager get pods -oyaml
    kind: Pod
    status:
      conditions:
      - lastTransitionTime: "2019-10-22T16:29:24Z"
        status: "True"
        type: PodScheduled
      …
      - lastTransitionTime: "2019-10-22T16:29:31Z"
        status: "True"
        type: Ready

However, the contents of the .status.conditions field are not standard among APIs. This enhancement defines some common fields and related methods to be implemented by new APIs.
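
As a rough sketch, a condition that follows the recommended schema could look like the snippet below. The field names follow the `metav1.Condition` type added in 1.19; the `Available` type, the reason, the message, and the timestamp are made-up values for illustration only.

```yaml
status:
  conditions:
  - type: Available                   # hypothetical condition type
    status: "True"                    # one of "True", "False", "Unknown"
    observedGeneration: 3             # .metadata.generation this condition was computed from
    lastTransitionTime: "2020-08-26T10:00:00Z"
    reason: MinimumReplicasAvailable  # hypothetical machine-readable reason
    message: Deployment has minimum availability.  # human-readable detail
```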

#1143 Clarify use of node-role labels within Kubernetes and migrate old components

Stage: Graduating to Beta

Feature group: architecture

Against the usage guidelines, some core and related projects started depending on labels from the node-role.kubernetes.io namespace to vary their behaviour, which could lead to problems in some clusters.

This feature summarizes the work done to clarify the proper use of the node-role labels so they won’t be misused again, and it removes the dependency on them where needed.

Read more in the release for 1.17 of the What’s new in Kubernetes series.

#1333 Enable running conformance tests without beta REST APIs or features

Stage: Graduating to Beta

Feature group: architecture

This enhancement collects the work done to ensure that neither the Kubernetes components nor the Kubernetes conformance tests depend on beta REST APIs or features. The end goal is to ensure consistency across distributions, as non-official distributions like k3s, Rancher, or OpenShift shouldn’t be required to enable non-GA features.

Initially introduced in Kubernetes 1.18, this feature now graduates to Beta.

#1001 Support CRI-ContainerD on Windows

Stage: Graduating to Beta

Feature group: windows

ContainerD is an OCI-compliant runtime that works with Kubernetes and has support for the host container service (HCS v2) in Windows Server 2019. This enhancement introduces support for ContainerD 1.3 on Windows as a container runtime, through the Container Runtime Interface (CRI).

Read more in the release for 1.18 of the What’s new in Kubernetes series.

Authentication in Kubernetes 1.19

#266 Kubelet Client TLS Certificate Rotation

Stage: Graduating to Stable

Feature group: auth

Introduced in Kubernetes 1.7 and in Beta since Kubernetes 1.8, this enhancement finally reaches GA.

This enhancement covers the process to obtain the kubelet certificate and rotate it as its expiration date approaches. This certificate/key pair is used by kubelet to authenticate against kube-apiserver. You can read more about this process in the Kubernetes documentation.
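
For reference, a minimal sketch of how client certificate rotation is typically switched on in the kubelet configuration file. Only the `rotateCertificates` field is relevant here; any real configuration would carry more settings.

```yaml
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
# Request a new client certificate from the API server
# as the current one approaches its expiration date.
rotateCertificates: true
```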

#279 Limit node access to API

Stage: Graduating to Stable

Feature group: auth

Node labels are a very useful mechanism when scheduling workloads. For example, you can use kubernetes.io/os to tag hosts as linux or windows, and that helps the scheduler to deploy pods on the right node.
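
A small sketch of that idea: the Pod below uses a `nodeSelector` on the `kubernetes.io/os` label so it only lands on Linux nodes. The Pod name and image are placeholders.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: linux-only            # hypothetical name
spec:
  nodeSelector:
    kubernetes.io/os: linux   # schedule only on nodes labeled as Linux
  containers:
  - name: app
    image: nginx              # placeholder image
```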

However, since nodes are owners of their own labels, a malicious actor could register a node with specific labels to capture dedicated workloads and secrets.

This enhancement summarizes the work done on the NodeRestriction admission plugin since Kubernetes 1.13 to prevent Kubelets from self-setting labels within core namespaces like k8s.io and kubernetes.io. You can read the details in the enhancement proposal.

#1513 CertificateSigningRequest API

Stage: Graduating to Stable

Feature group: auth

Each Kubernetes cluster has a root certificate authority that is used to secure communication between core components, with the certificates handled by the Certificates API. Because this is convenient, the API eventually started to be used to provision certificates for non-core uses as well.

This enhancement aims to embrace the new situation, adding a Registration Authority figure to improve both the signing process and its security.
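
To give an idea of the shape of a request in the stable API, here is a hedged sketch of a `certificates.k8s.io/v1` object. The object name is hypothetical and the `request` value is a placeholder, not real data; in v1 both `signerName` and `usages` must be set explicitly.

```yaml
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: example-client               # hypothetical name
spec:
  # Placeholder: put the base64-encoded PEM certificate request here.
  request: <base64-encoded CSR>
  # The signer that should handle this request.
  signerName: kubernetes.io/kube-apiserver-client
  usages:
  - client auth
```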

Read more in the release for 1.18 of the What’s new in Kubernetes series.

Kubernetes 1.19 kubeadm configurations

#1381 New kubeadm component config scheme

Stage: Alpha

Feature group: cluster-lifecycle

kubeadm generates, validates, defaults, and stores configurations for several services of the cluster. The current implementation uses internal component configs, such as those for kubelet and kube-proxy, which cause several problems.

For example, including default values in configurations complicates maintenance. Are those values intended or just defaulted? When a default changes in a new version, should you keep the old value or the new one?

Also, including this code inside kubeadm is a potential point of divergence between vendors.

A complete revamp is in the works for how kubeadm manages these configurations. The changes include no longer defaulting component configs and delegating config validation.

#1739 kubeadm: customization with patches

Stage: Alpha

Feature group: cluster-lifecycle

In Kubernetes 1.16, #1177 Advanced configurations with kubeadm (using Kustomize) was introduced to allow patching base configurations and obtaining configuration variants. For example, you can have a base configuration for your service, then patch it with different limits for each of your dev, test, and prod environments.

The kubeadm team has now decided to use raw patches instead, in a similar way to kubectl. This avoids adding a complex dependency like Kustomize.

For this, a new flag --experimental-patches has been added, mirroring the existing --experimental-kustomize, and once the feature reaches Beta, it will be renamed to --patches.

You would use it like:

    kubeadm init --experimental-patches kubeadm-dev-patches/
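
As a sketch of what such a directory could contain: kubeadm expects patch files named `target[suffix][+patchtype].extension`, where the patch type can be `strategic`, `merge`, or `json`. The file name and the resource values below are illustrative assumptions, not taken from a real setup.

```yaml
# kubeadm-dev-patches/kube-apiserver+strategic.yaml (hypothetical file)
# Strategic merge patch applied to the kube-apiserver static Pod manifest.
apiVersion: v1
kind: Pod
metadata:
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - name: kube-apiserver
    resources:
      requests:
        cpu: 100m   # illustrative smaller request for a dev cluster
```

A prod variant would live in its own directory with different values, and you would pick one or the other with the flag.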

Kubernetes 1.19 instrumentation

#1602 Structured logging

Stage: Alpha

Feature group: instrumentation

Currently, the Kubernetes control plane logs don’t follow any common structure. This complicates parsing, processing, storing, querying, and analyzing logs.

This enhancement defines a standard structure for Kubernetes log messages, like:

    E1025 00:15:15.525108 1 controller_utils.go:114] "Failed to update pod status" err="timeout"

To achieve this, methods like InfoS and ErrorS have been added to the klog library. You can find more details in the Kubernetes 1.19 documentation.

#383 Redesign Event API

Stage: Graduating to Stable

Feature group: instrumentation

This effort has two main goals – reduce the performance impact that Events have on the rest of the cluster, and add more structure to the Event object in order to enable event analysis.

Read more in the release for 1.15 of the What’s new in Kubernetes series.

Kubernetes 1.19 network

#1453 Graduate Ingress to V1

Stage: Major Change to Stable

Feature group: network

An Ingress resource exposes HTTP and HTTPS routes from outside the cluster to services within the cluster. After being introduced in Kubernetes 1.1, this API now reaches GA.

This version includes several changes; for example, the serviceName and servicePort backend fields are now service.name and service.port. Check the Kubernetes 1.19 documentation for more details.
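
A minimal sketch of an Ingress written against the v1 API, to show where the renamed backend fields end up. The host, resource names, and port are placeholders; note that `pathType` has to be set explicitly in v1.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress     # hypothetical name
spec:
  rules:
  - host: example.com       # placeholder host
    http:
      paths:
      - path: /
        pathType: Prefix    # required in v1
        backend:
          service:
            name: web       # was backend.serviceName in v1beta1
            port:
              number: 80    # was backend.servicePort in v1beta1
```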

Read more about Ingress in the release for 1.18 of the What’s new in Kubernetes series.

#752 EndpointSlice API

Stage: Major Change to Beta

Feature group: network

The new EndpointSlice API will split endpoints into several Endpoint Slice resources. This solves many problems in the current API that are related to big Endpoints objects. This new API is also designed to support other future features, like multiple IPs per pod.

In 1.19, kube-proxy will use EndpointSlices by default on Linux. On Windows, support for them is in Alpha. Check the rollout plan for more info.
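
For orientation, this is roughly what one slice looks like in the `discovery.k8s.io/v1beta1` API. The object name, Service name, and IP address are made up for the example.

```yaml
apiVersion: discovery.k8s.io/v1beta1
kind: EndpointSlice
metadata:
  name: web-abc12                     # hypothetical name
  labels:
    kubernetes.io/service-name: web   # the Service this slice belongs to
addressType: IPv4
ports:
- name: http
  protocol: TCP
  port: 80
endpoints:
- addresses:
  - "10.1.2.3"                        # placeholder Pod IP
  conditions:
    ready: true
```

A Service with many backends is represented by several of these objects instead of one large Endpoints object.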

Read more in the release for 1.16 of the What’s new in Kubernetes series.

#1507 Adding AppProtocol to Services and Endpoints

Stage: Graduating to Beta

Feature group: network

The EndpointSlice API added a new AppProtocol field in Kubernetes 1.17 to allow application protocols to be specified for each port. This enhancement brings that field into the ServicePort and EndpointPort resources, replacing non-standard annotations that are causing a bad user experience.
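
A short sketch of the field on a Service port. The Service name, selector, and port numbers are placeholders.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web               # hypothetical name
spec:
  selector:
    app: web
  ports:
  - name: https
    protocol: TCP
    appProtocol: https    # application protocol spoken on this port
    port: 443
    targetPort: 8443
```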

Initially introduced in Kubernetes 1.18, this enhancement now graduates to Beta.

#614 SCTP support for Services, Pod, Endpoint, and NetworkPolicy

Stage: Graduating to Beta

Feature group: network

SCTP is now supported as an additional protocol alongside TCP and UDP in Pod, Service, Endpoint, and NetworkPolicy.
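
In practice this means `SCTP` becomes a valid value for the `protocol` field. A minimal sketch on a Service, with placeholder names and ports:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: sctp-service      # hypothetical name
spec:
  selector:
    app: signalling       # hypothetical label
  ports:
  - protocol: SCTP        # alongside the existing TCP and UDP values
    port: 9999
    targetPort: 9999
```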

Introduced in Kubernetes 1.12, this feature finally graduates to Beta.

Kubernetes 1.19 nodes

#1797 Allow users to set a pod’s hostname to its Fully Qualified Domain Name (FQDN)

Stage: Alpha

Feature group: node

Now, it’s possible to set a pod’s hostname to its Fully Qualified Domain Name (FQDN), which increases the interoperability of Kubernetes with legacy applications.

After setting setHostnameAsFQDN: true in the Pod spec (the proposal originally called this field hostnameFQDN), running uname -n inside a Pod returns foo.test.bar.svc.cluster.local instead of just foo.
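
A sketch of a Pod that would produce that FQDN. The field shipped in the 1.19 API as `setHostnameAsFQDN`, behind the alpha `SetHostnameAsFQDN` feature gate; the image and command are placeholders, and a headless Service named `test` in the `bar` namespace is assumed to exist so the subdomain resolves.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: foo
  namespace: bar
spec:
  hostname: foo
  subdomain: test             # assumes a headless Service named "test" in namespace "bar"
  setHostnameAsFQDN: true     # alpha in 1.19, needs the SetHostnameAsFQDN feature gate
  containers:
  - name: app
    image: busybox            # placeholder image
    command: ["sleep", "3600"]
```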

You can read more details in the enhancement proposal.

#1867 Kubelet Feature: Disable AcceleratorUsage Metrics

Stage: Alpha

Feature group: node

With #606 (Support 3rd party device monitoring plugins) and the PodResources API about to enter GA, Kubelet is no longer expected to gather these metrics itself.

This enhancement summarizes the process to deprecate Kubelet collecting those Accelerator Metrics.

#693 Node Topology Manager

Stage: Major Change to Beta

Feature group: node

Machine learning, scientific computing, and financial services are examples of systems that are computationally intensive and require ultra-low latency. These kinds of workloads benefit from having their processes pinned to one CPU core, rather than jumping between cores or sharing time with other processes.

The node topology manager is a kubelet component that centralizes the coordination of hardware resource assignments. The current approach divides this task between several components (CPU manager, device manager, CNI), which sometimes results in unoptimized allocations.

In 1.19, two minor enhancements were added to this feature: GetPreferredAllocation() and GetPodLevelTopologyHints().

Read more in the release for 1.16 of the What’s new in Kubernetes series.

#135 Seccomp

Stage: Graduating to Stable

Feature group: node

Seccomp provides sandboxing: it restricts the actions that a process can perform, which reduces a system’s potential attack surface. Seccomp is one of the tools Kubernetes uses to keep your Pods secure.

After several versions in Alpha, this enhancement finally brings into GA the ability to customize what seccomp profile is applied to a Pod (using Pod Security Policies).
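
With the GA release, the profile can also be set through a first-class `seccompProfile` field in the security context instead of annotations. A minimal sketch, with a placeholder Pod name and image:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: seccomp-demo          # hypothetical name
spec:
  securityContext:
    seccompProfile:
      type: RuntimeDefault    # use the container runtime's default profile
  containers:
  - name: app
    image: nginx              # placeholder image
```

The other accepted types are `Unconfined` and `Localhost`; the latter also needs a `localhostProfile` path to a profile on the node.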

#1547 Building Kubelet without Docker

Stage: Graduating to Stable

Feature group: node

This enhancement is part of the effort to remove the dependency on the docker/docker Golang package, and move it out-of-tree to make Kubelet easier to maintain.

In particular, this enhancement’s only goal is to make sure Kubelet compiles and works without said docker dependency, but it doesn’t cover the work to move the docker code out-of-tree.

Kubernetes 1.19 scheduler

#1258 Add a configurable default Even Pod Spreading rule

Stage: Alpha

Feature group: scheduling

In order to take advantage of even pod spreading, each pod needs its own spreading rules, and defining them can be a tedious task.

This enhancement allows you to define global defaultConstraints that will be applied at cluster level to all of the pods that don’t define their own topologySpreadConstraints.
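
A sketch of how those defaults could be declared in the scheduler configuration, assuming the alpha `DefaultPodTopologySpread` feature gate is enabled. The skew and topology key are example values.

```yaml
apiVersion: kubescheduler.config.k8s.io/v1beta1
kind: KubeSchedulerConfiguration
profiles:
- pluginConfig:
  - name: PodTopologySpread
    args:
      # Applied to pods that don't define their own topologySpreadConstraints.
      defaultConstraints:
      - maxSkew: 1
        topologyKey: topology.kubernetes.io/zone
        whenUnsatisfiable: ScheduleAnyway
```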

#895 Even pod spreading across failure domains

Stage: Graduating to Beta

Feature group: scheduling

With topologySpreadConstraints, you can define rules to distribute your pods evenly across your multi-zone cluster, so high availability will work correctly and the resource utilization will be efficient.
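
For illustration, a Pod that asks to be spread evenly across zones relative to the other pods carrying the same `app: web` label. Names, labels, and image are placeholders.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: web-1                 # hypothetical name
  labels:
    app: web
spec:
  topologySpreadConstraints:
  - maxSkew: 1                                 # max allowed difference in pod count between zones
    topologyKey: topology.kubernetes.io/zone   # node label that defines a zone
    whenUnsatisfiable: DoNotSchedule           # keep the pod pending rather than break the rule
    labelSelector:
      matchLabels:
        app: web                               # pods counted when computing the skew
  containers:
  - name: web
    image: nginx              # placeholder image
```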

Read more in the release for 1.16 of the What’s new in Kubernetes series.

#785 Graduate the kube-scheduler ComponentConfig to v1beta1

Stage: Graduating to Beta

Feature group: scheduling

ComponentConfig is an ongoing effort to make component configuration more dynamic and directly reachable through the Kubernetes API.

Its API for kube-scheduler has been maturing in Alpha for some versions, and now it’s ready to start its journey to GA. You can check the scheduler configuration options in the Kubernetes 1.19 documentation.

#902 Add non-preempting option to PriorityClasses

Stage: Graduating to Beta

Feature group: scheduling

Currently, PreemptionPolicy defaults to PreemptLowerPriority, which allows high-priority pods to preempt lower-priority pods.

This enhancement adds the PreemptionPolicy: Never option, so Pods can be placed in the scheduling queue ahead of lower-priority pods, but they cannot preempt other pods.
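
A minimal sketch of such a class. The name, value, and description are example choices.

```yaml
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: high-priority-nonpreempting   # hypothetical name
value: 1000000                        # example priority value
preemptionPolicy: Never               # queue ahead of lower priorities, but never evict them
globalDefault: false
description: "High priority, but never preempts running pods."
```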

Read more in the release for 1.15 of the What’s new in Kubernetes series.

#1451 Run multiple Scheduling Profiles

Stage: Graduating to Beta

Feature group: scheduling

This enhancement allows you to run one scheduler with different configurations, or profiles, instead of running one scheduler for each configuration, which can lead to race conditions.
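
As a sketch, the configuration below defines two profiles in a single kube-scheduler: the default one and a second, hypothetically named one with all scoring plugins disabled. A Pod picks a profile through its `spec.schedulerName`.

```yaml
apiVersion: kubescheduler.config.k8s.io/v1beta1
kind: KubeSchedulerConfiguration
profiles:
- schedulerName: default-scheduler
- schedulerName: no-scoring-scheduler   # hypothetical profile name
  plugins:
    preScore:
      disabled:
      - name: '*'                       # disable every preScore plugin
    score:
      disabled:
      - name: '*'                       # disable every score plugin
```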

Read more in the release for 1.18 of the What’s new in Kubernetes series.

Kubernetes 1.19 storage

#1698 Generic ephemeral inline volumes

Stage: Alpha

Feature group: storage

There are several ways you can define ephemeral volumes, that is, volumes that are created for specific pods and deleted after the pod terminates.

However, the functionality of the ephemeral volume drivers implemented directly in Kubernetes (EmptyDir, Secrets, ConfigMap) is limited to what is implemented inside Kubernetes. And for CSI ephemeral volumes to work, the CSI driver must be updated.

This enhancement provides a simple API to define inline ephemeral volumes that will work with any storage driver that supports dynamic provisioning. This is one example of how generic ephemeral volumes can be used:

    apiVersion: apps/v1
    kind: DaemonSet
    …
    spec:
      …
      - name: scratch
        ephemeral:
          # In the API shipped with 1.19, the claim is nested under volumeClaimTemplate.
          volumeClaimTemplate:
            metadata:
              labels:
                type: fluentd-elasticsearch-volume
            spec:
              accessModes: [ "ReadWriteOnce" ]
              storageClassName: "scratch-storage-class"
              resources:
                requests:
                  storage: 1Gi

You can get further details in the enhancement proposal.

#1472 Storage Capacity Tracking

Stage: Alpha

Feature group: storage

This enhancement tries to prevent pods from being scheduled on nodes connected to CSI volumes without enough free space available.

This will be done by gathering capacity information, and then storing that information in the API server so it is available to the scheduler.

#1682 Allow CSI drivers to opt-in to volume ownership change

Stage: Alpha

Feature group: storage

Before a CSI volume is bind mounted inside a container, Kubernetes modifies the volume ownership via fsGroup. However, not all volumes support this operation (NFS, for example), which can result in errors being reported to the user.

Currently, Kubernetes uses some heuristics to determine if the volume supports fsGroup-based permission change, but those heuristics don’t always work and cause problems with some storage types.

This enhancement adds a new field to the CSIDriver spec that allows the driver to define if it supports volume ownership modifications via fsGroup. The proposal called it CSIDriver.Spec.SupportsFSGroup, with the values OnlyRWO, Never, and Always; in the API shipped with 1.19 it is named fsGroupPolicy and can have the following values: ReadWriteOnceWithFSType matches the current behavior, None instructs Kubernetes to not try to modify the volume ownership, and File instructs it to always attempt to apply the defined fsGroup.
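
A hedged sketch of a driver opting out of ownership changes. In the API shipped with 1.19 the field is named `fsGroupPolicy` (behind the alpha `CSIVolumeFSGroupPolicy` feature gate) and takes `ReadWriteOnceWithFSType`, `File`, or `None`; the driver name below is hypothetical.

```yaml
apiVersion: storage.k8s.io/v1
kind: CSIDriver
metadata:
  name: nfs.csi.example.com   # hypothetical driver name
spec:
  # None: never attempt to change volume ownership via fsGroup.
  fsGroupPolicy: None
```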

#1412 Immutable Secrets and ConfigMaps

Stage: Graduating to Beta

Feature group: storage

A new immutable field has been added to Secrets and ConfigMaps. When set to true, any change to the resource’s keys will be rejected. This protects the cluster from accidental bad updates that would break the applications.

A secondary benefit derives from immutable resources. Since they don’t change, Kubelet doesn’t need to periodically check for their updates, which can improve scalability and performance.
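
A minimal sketch of an immutable ConfigMap; the name and data are placeholders.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-settings    # hypothetical name
data:
  LOG_LEVEL: info       # placeholder key and value
immutable: true         # further changes to data are rejected
```

To change the contents after this point, you would delete the object and create it again.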

Read more in the release for 1.18 of the What’s new in Kubernetes series.

#1490 Azure disk in-tree to CSI driver migration

Stage: Graduating to Beta

Feature group: storage

This enhancement is part of the #625 In-tree storage plugin to CSI Driver Migration effort.

Storage plugins were originally in-tree, inside the Kubernetes codebase, increasing the complexity of the base code and hindering extensibility. Moving all of this code to loadable plugins will reduce the development costs and will make it more modular and extensible.

This enhancement will replace the internals of the in-tree Azure Disk Plugin with calls to the Azure Disk CSI Driver, helping the migration while maintaining the original API.

#1491 vSphere in-tree to CSI driver migration

Stage: Graduating to Beta

Feature group: storage

This enhancement is also part of the #625 In-tree storage plugin to CSI Driver Migration effort.

The CSI driver for vSphere is already stable, so the migration of the in-tree code can continue its progress.

That’s all, folks! Exciting as always; get ready to upgrade your clusters if you are intending to use any of these features.

If you liked this, you might want to check out our previous ‘What’s new in Kubernetes’ editions:

- What’s new in Kubernetes 1.18

- What’s new in Kubernetes 1.17

- What’s new in Kubernetes 1.16

- What’s new in Kubernetes 1.15

- What’s new in Kubernetes 1.14

- What’s new in Kubernetes 1.13

- What’s new in Kubernetes 1.12
